IoT & Embedded Technology Blog

Practical Edge AI: Takeaways from Qualcomm’s Latest Chipset Demos

by Joe Abajian | 10/09/2025

Qualcomm sponsored VDC's travel to Snapdragon Summit 2025.

This year, the event featured dozens of high-performance on-device AI demonstrations. All newly announced Qualcomm chipsets include a dedicated NPU, which enables offline, isolated AI. On-device AI has several benefits that engineering organizations have historically struggled to leverage due to compute constraints. Most notably, running AI models on mobile or edge devices reduces cloud computing costs, which are a significant burden for many organizations. As model providers transition to pricing structures that yield better margins, running models on-device will grow in importance.

As hardware has improved, model optimization has advanced simultaneously, creating smaller models that are significantly more accurate than their larger, older counterparts. Model and chip performance improvements have enabled on-device AI with real commercial value.

Here are some of the most interesting live AI demonstrations from Snapdragon Summit 2025:

Computer Vision Capture for Biomechanics

Ever questioned your golf swing? Most golfers have. Luckily, Qualcomm’s latest mobile SoCs can run on-board computer vision models that provide live diagnostics. Commercially, this use case has strong potential across industries. Live biomechanical tracking is a powerful tool for worker safety, smart retail, and general security systems.

In industrial settings, continuous monitoring of worker posture, movement, and interaction with machines can prevent process errors, accidents, and injuries. It can also help train new employees by providing real-time feedback. In retail environments, biomechanical analytics adds a layer to traditional surveillance and anti-theft measures. Several asset protection solutions already use computer vision to detect theft, and an on-device AI-enabled solution would reduce the costs associated with smart asset protection. Similarly, retailers can move vision-based solutions that track customer activity to optimize store layout out of the cloud and onto the edge.

Voice-Activated On-Device LLMs

To emphasize that AI is the new UI, Qualcomm demonstrated the power of fully voice-enabled LLM interactions. While the tech itself took a little tinkering and patience, voice-enabled LLM queries will help mobile and frontline workers safely access AI while in the field, regardless of internet availability. Additionally, voice-based LLM prompting is the foundation of Qualcomm’s agentic AI vision, which includes accessing specialized agents based on real-time needs via voice prompts.

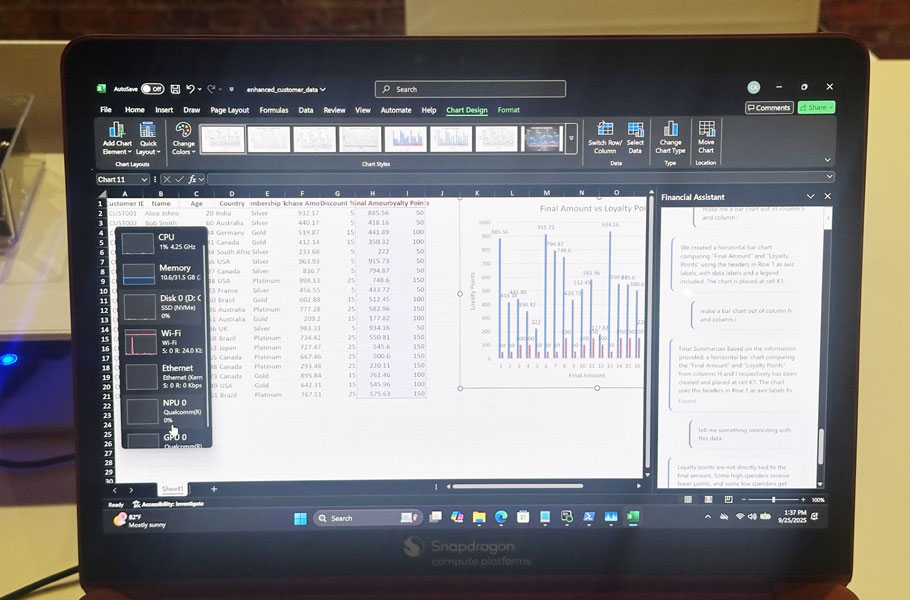

AI Is a Reliable Assistant Across Applications

With the emergence of industrial copilots, demand for specialized AI assistants will grow rapidly across industrial IoT and embedded verticals. The photo above shows Qualcomm’s demonstration of a specialized assistant for Microsoft Excel that ran locally on an X2 chip. Obviously, most engineering organizations need assistants capable of functions more complex than averaging data in Excel, but this demo showed the potential for low-latency, local AI assistants. In many security-critical industries, engineers and workers are prohibited from using AI assistants due to perceived security risks. With local AI, workplace and engineering acceleration can take place without the risks of accessing a public cloud.

Other Demos and Conclusion

Beyond the demonstrations featured above, Qualcomm targeted several other use cases, including smart home technology, agentic features for mobile phones, and wearable technology. Much of Qualcomm’s AI vision has yet to come to fruition, but its commitment to on-device AI is clear. With Qualcomm and other silicon leaders supporting edge AI initiatives, the number of applications running at the edge will continue to grow. In tandem, specialized models will run on a diverse array of edge devices suited to specific industries and environments.

For more coverage of the future of edge AI models and development, keep an eye out for the second iteration of VDC’s upcoming Edge AI Development Solutions research. You can also view the 2024 executive brief here.