Intel Struts Its Stuff for Autonomous Driving

On May 3, Intel invited dozens of press and analysts from around the world to Autonomous Driving Day, a program of presentations from senior executives and demos from partners, literally rolling up the garage door on its new autonomous driving engineering facility in the former Altera building in San Jose, California.

One of Intel’s autonomous driving test vehicles

Although none of the individual technologies was being shown for the first time, it was the first time they had been presented collectively, showcasing the strategic importance of autonomous driving for Intel’s future.

Doug Davis, senior vice president and general manager of Intel’s Automated Driving Group, discusses his mission to bring Intel autonomous driving technology to the masses

A demonstration using Microsoft’s HoloLens enabled attendees to see augmented reality visualizations of data flowing between vehicle and infrastructure via 5G wireless.

Microsoft HoloLens for augmented reality visualization of streaming data communications

Some of the augmented reality visuals as seen through HoloLens

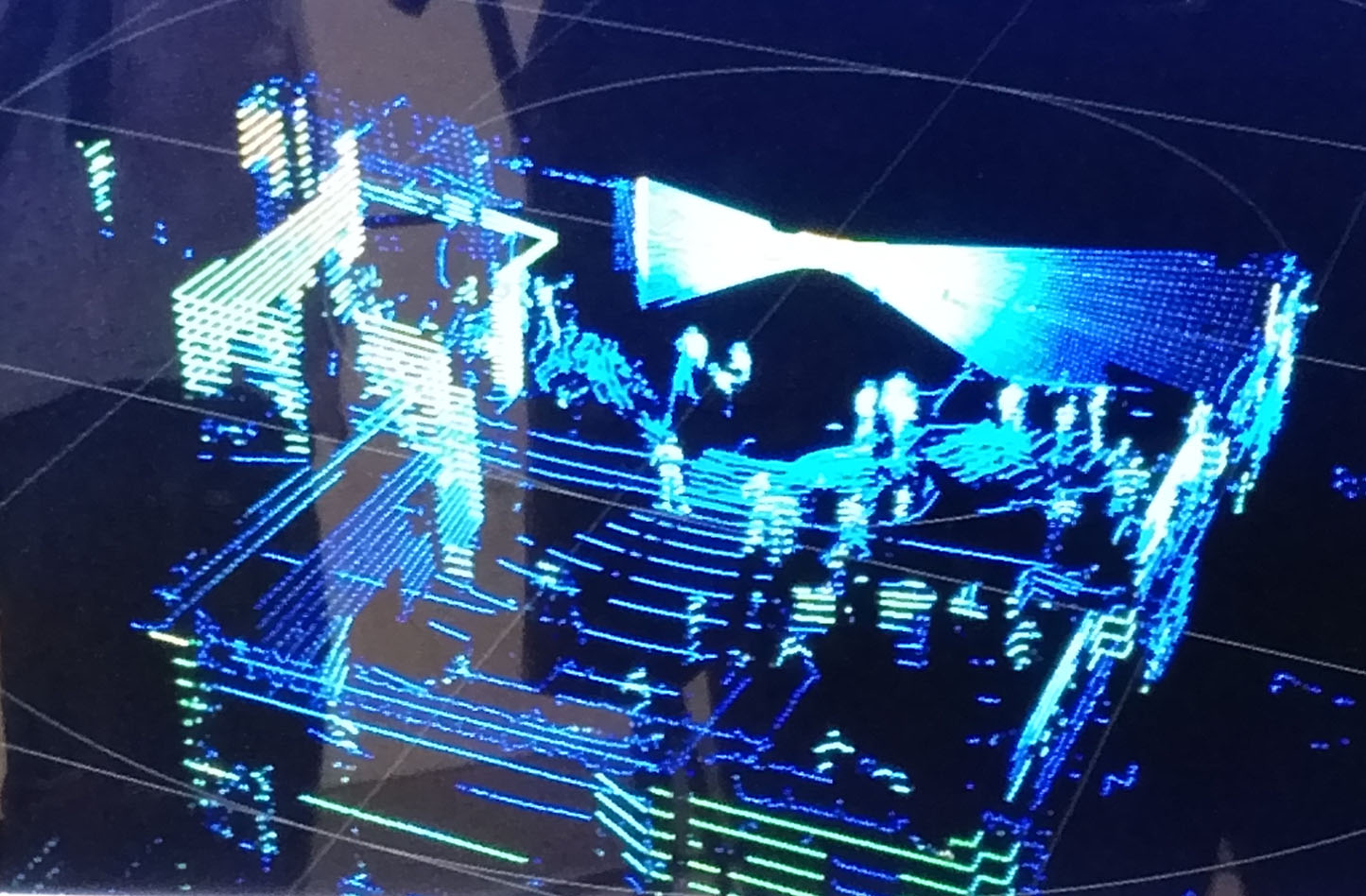

A live 5G link using Ericsson communications hardware streamed data from a LiDAR (laser-based) scanning sensor in the garage to a roof-mounted 5G antenna on a vehicle. A trunk-mounted Intel computer in the vehicle processed the data and displayed a real-time 3D representation of the cars and people in the garage.

Cutaway view of roof-mounted 5G shark fin antenna

Real-time LiDAR 3D view of the garage area

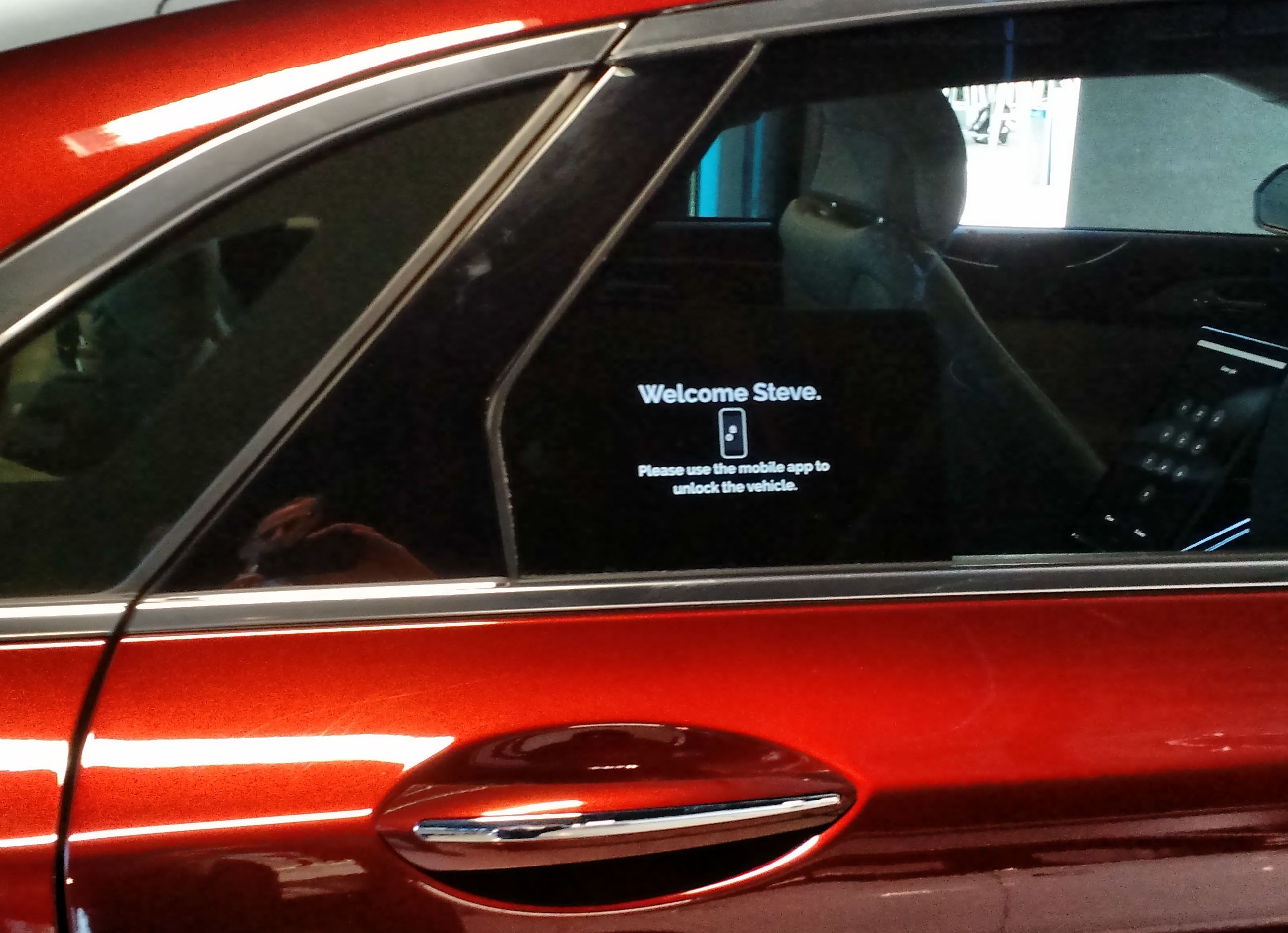

Intel also demonstrated simulations of the human-machine interface (HMI) for an autonomous ride-sharing vehicle, which showed that the company has put considerable thought into the behavioral aspects of such an interaction.

Ride-sharing HMI demo

BMW is a major strategic partner of Intel’s in the automotive space, and rumor has it that BMW, as an early user of Mobileye technology, was the catalyst for Intel’s acquisition of that company in March 2017. At Autonomous Driving Day, BMW brought along one of its 40 autonomous test vehicles, the first of its fleet to be licensed for road testing in the US.

BMW autonomous test car

Additional demos in the garage included: Intel’s GO automotive SDK; HERE’s live-updating maps; Delphi’s Integrated Cockpit Controller (which drives both an infotainment system and an instrument cluster from the same SoC running a hypervisor); a deep learning algorithm running on a low-power Arria 10 FPGA (one of the products Intel acquired with Altera); and Wind River’s Helix CarSync platform for over-the-air firmware updates.

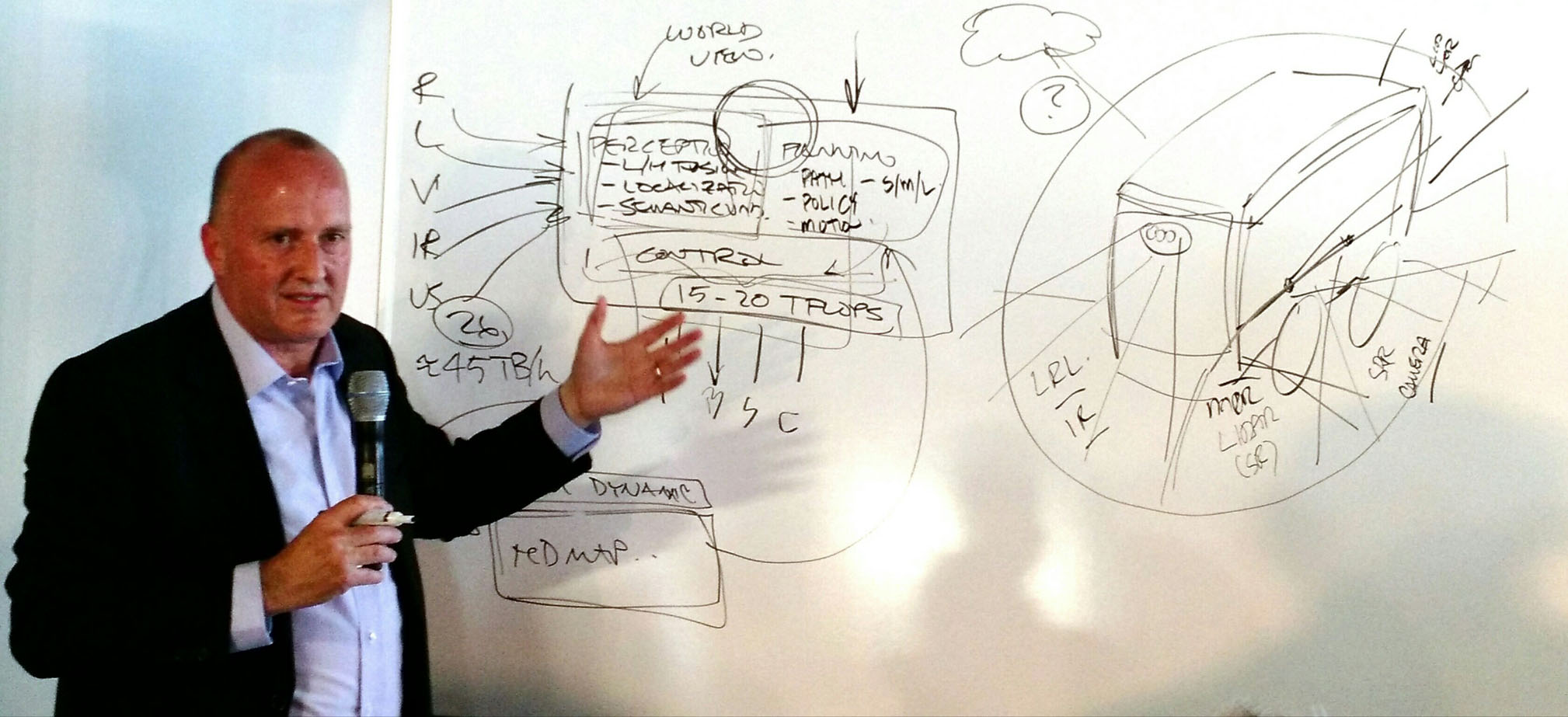

The event also featured a series of “chalk talks” by Intel and partner executives. In one, Glen de Vos, Delphi’s chief technology officer, described that company’s “secret sauce” for handling autonomous vehicle data. He noted that a single autonomous car can generate 45 terabytes of raw data per hour, which will require 15 to 20 teraflops of processing power, about 10 to 20 times more than the processing power in today’s vehicles. Jason Waxman, vice president and general manager for Intel’s Data Center Solutions, later noted that about 4 terabytes per day of that data needs to go to a datacenter for processing. For today’s autonomous test vehicles, that transfer is done by physically moving hard drives. Waxman added that once autonomous vehicles become commonplace, a major challenge will be scaling up datacenters to handle the volume.
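For a sense of scale, the data volumes quoted in the talks can be converted into sustained data rates. The sketch below is our own back-of-the-envelope arithmetic, not a Delphi or Intel calculation. It assumes decimal units (1 TB = 10^12 bytes) and that data is produced or uploaded evenly over each period.

```python
# Back-of-the-envelope arithmetic (our assumption, not Delphi's or Intel's):
# decimal units, 1 TB = 10**12 bytes, data spread evenly over each interval.
TB = 10**12
SECONDS_PER_HOUR = 3600
SECONDS_PER_DAY = 24 * SECONDS_PER_HOUR


def sustained_rate_bytes_per_s(total_bytes: float, seconds: float) -> float:
    """Average rate needed to move total_bytes evenly over the given interval."""
    return total_bytes / seconds


# 45 TB of raw sensor data per hour of driving (figure quoted by Delphi).
raw = sustained_rate_bytes_per_s(45 * TB, SECONDS_PER_HOUR)
# About 4 TB per day sent to a datacenter (figure quoted by Intel).
upload = sustained_rate_bytes_per_s(4 * TB, SECONDS_PER_DAY)

print(f"Raw sensor data: {raw / 1e9:.1f} GB/s ({raw * 8 / 1e9:.0f} Gbit/s)")
print(f"Datacenter upload: {upload / 1e6:.1f} MB/s ({upload * 8 / 1e6:.0f} Mbit/s)")
```

Under these assumptions, 45 TB per hour works out to about 12.5 GB/s (100 Gbit/s) inside the car, and even the 4 TB per day bound for the datacenter would need a sustained uplink of roughly 370 Mbit/s around the clock, which suggests why test fleets still ship hard drives.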

Delphi CTO Glen de Vos explaining the complexities of autonomous vehicle data

But the star of the event was unquestionably Delphi’s autonomous test vehicle, in which many of the attendees (including yours truly) got a test ride on the streets of nearby Santa Clara. Unlike most autonomous test cars, which have multiple gadgets prominently affixed to their roofs, this modified Audi SQ5 had 26 sensors (10 radar, 10 cameras, and 6 LiDAR) integrated into its bumpers and behind its front and rear glass, making it look like a conventional vehicle (except for its bold graphics proclaiming otherwise).

Delphi’s autonomous Audi test car

Two Delphi engineers accompanied the test drive. One of them is legally required to sit in the driver’s seat to take over in the event of a failure of the autonomous system, but no such intervention was needed. In autonomous mode, the car drove us through normal Silicon Valley afternoon traffic, changing lanes, turning, and stopping at busy intersections without any prompting, while the engineer in the front passenger seat explained how the vehicle was processing all the sensor data. Several intersections in the area were specially equipped with DSRC (dedicated short-range communications) devices that wirelessly exchanged traffic status and potential alert information with a compatible radio in the vehicle. Although DSRC wasn’t necessary for the vehicle to function, it improves overall behavior by adding yet another set of data to the vehicle’s copious sensor input.

This was our first-ever ride in an autonomous vehicle on public roads (as opposed to a cordoned-off area in a parking lot). The ride was a bit bumpy due to the Audi’s sporty suspension and the rough road surface, but we were particularly impressed by how the car behaved just like a normal human driver, for example, accelerating briskly when red lights turned green rather than tentatively inching out. About the only things that would have made it more like a normal human driver would have been the abilities to honk the horn and flash a virtual middle finger at other drivers who make boneheaded maneuvers.

Intel’s Market Position

Altogether, the event admirably conveyed how serious Intel is about pursuing the autonomous driving market. Not only does that market represent the potential for high unit volume, it also has uniquely intensive processing requirements (as well as power consumption constraints). The electronics in conventional (non-autonomous) vehicles are becoming increasingly sophisticated, particularly for infotainment systems and ADAS (advanced driver assistance systems), the latter being a stepping stone to autonomous functions. But full autonomy requires an order of magnitude more processing, particularly for the numerous imaging sensors involved (visual cameras, radar, LiDAR) and for the sensor fusion needed to create a comprehensive, continuously updated car’s-eye view of the vehicle’s surroundings.

Intel competitor ARM recently introduced its Mali-C71 image signal processor technology for the automotive market, which is designed to process raw image data before forwarding it for sensor fusion. ARM is the dominant processor architecture for the numerous microcontrollers and microprocessors in today’s vehicles, which monitor and control everything from keyless entry and lighting to engine and braking systems, as well as ADAS. However, ARM is not currently a significant player in the more processing-intensive chips for imaging sensor fusion in fully autonomous vehicles.

NVIDIA, best known for its graphics processing units (GPUs) for gaming, is competing more directly with Intel in the autonomous driving market. NVIDIA is now aggressively pushing its DRIVE PX 2 in-vehicle hardware platform, DriveWorks software development kit, and DGX-1 datacenter servers (to handle analytics of car data).

Intel’s range of x86-based processors—from Atom to Xeon—along with its FPGAs, gives it a strong competitive position for both in-vehicle and datacenter applications for autonomous driving. While Intel might have missed the giant wave of the mobile phone market, Autonomous Driving Day demonstrated that the company has no intention of making that same mistake in the forthcoming autonomous car market.

View VDC's 2019 IoT & Embedded Technology Research Outline to learn more.